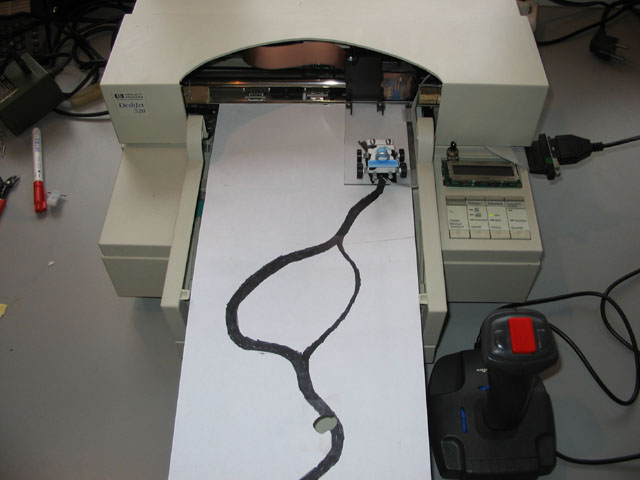

The autonomous robot “Don Quichotte” (Don Quixote in English spelling) was designed to compete in the “c’t magazine cleaning robot contest” held on October 14-19, 2001 at the Systems trade show in München, Germany. The idea was to build a versatile robot that is not only capable of solving the tasks defined by this specific contest, but can also be easily reprogrammed for other tasks.

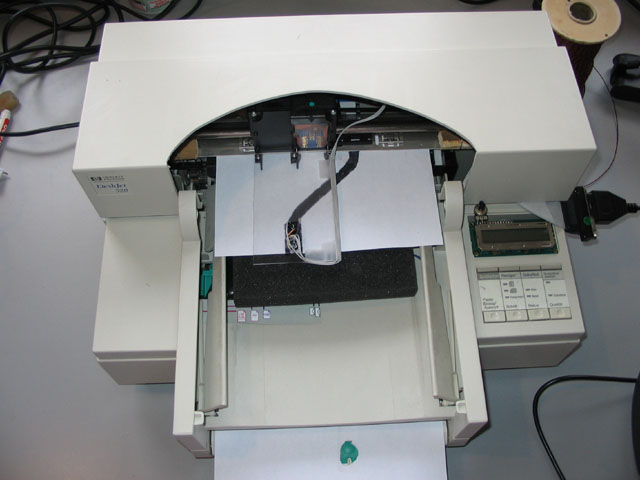

Robot without front scoop

Don Quichotte Fact Sheet

| Property | Value |
| --- | --- |
| Measurements (l x b x h) | 30 x 33 x 18 cm (main unit); 30 x 22 x 13 cm (front scoop); 140 cm (height of camera) |
| Wheel diameter | 15 cm (main); 3 cm (castor) |
| Weight | 9 kg (including 2.5 kg laptop) |
| Material | Plywood, wood |
| Battery | 12 V, 7.2 Ah sealed lead-acid |
| Operating time before recharge | >1.5 hours |
| Speed | 25 cm/s (about 1 km/h) |

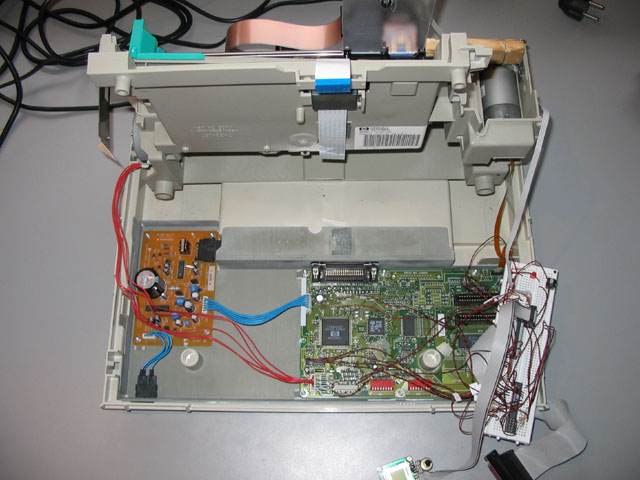

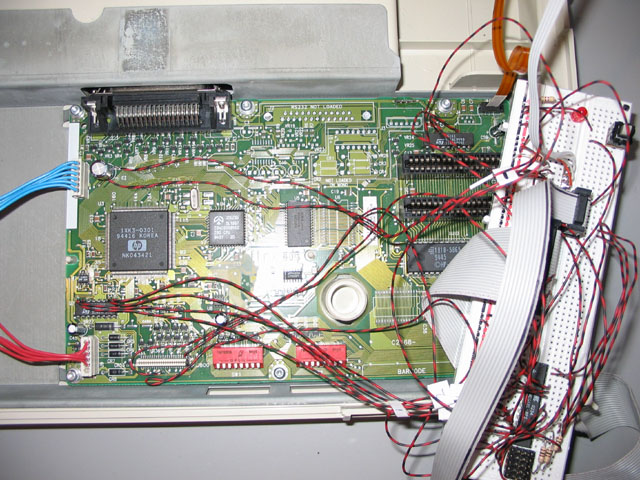

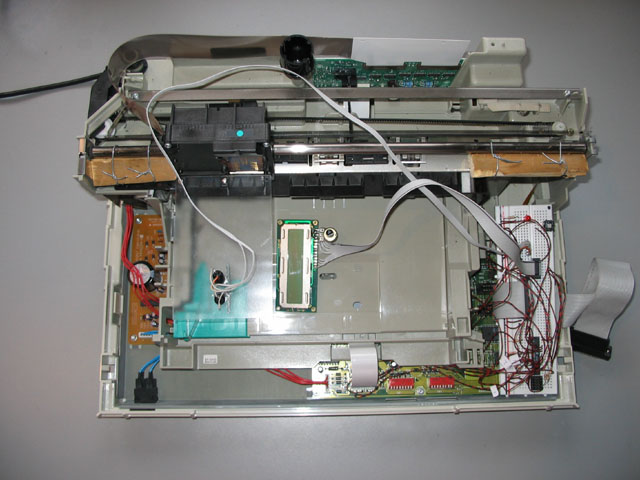

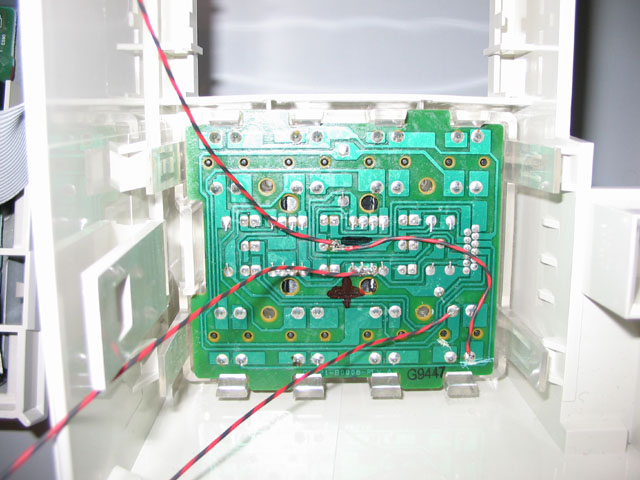

| Property | Value |
| --- | --- |
| Electronics | Dell Latitude CPiA Celeron 366/64MB laptop; Logitech QuickCam Pro 3000 USB camera; IPC@Chip SC12 microcontroller; L298 dual motor driver; Robbe S161 RC servo; two automotive rear window wiper motors |
| Software | Laptop: custom program in C++ (MS Visual C++), Logitech SDK, Intel Image Processing Library; Microcontroller: custom program in C (Borland Turbo C) |

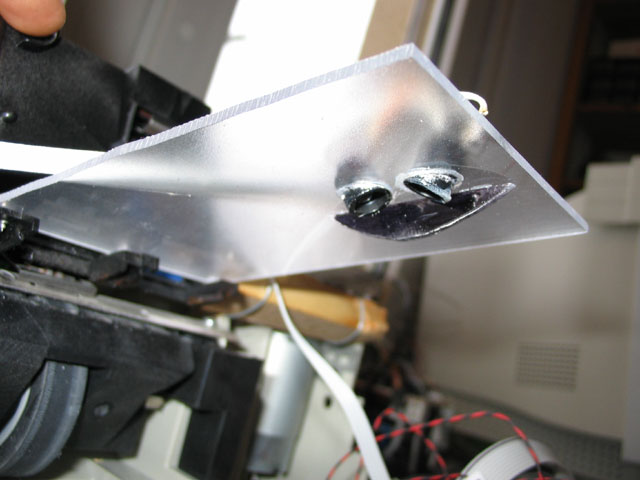

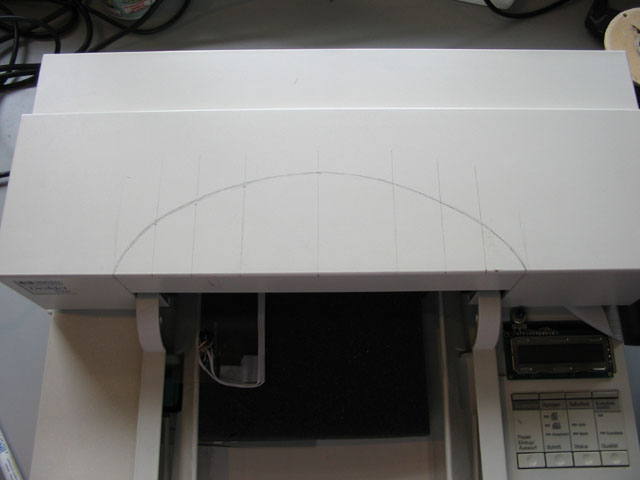

Construction

Don Quichotte is constructed out of plywood and wood. A bumper in front, connected to two micro switches, senses forward collisions. A scoop made out of cardboard and soft PU foam is mounted to the bumper. Mobility is provided by differential steering on two 12 V automotive rear window wiper motors. The motors are driven by an L298 dual motor driver, which in turn is controlled by the SC12. Power for the motors is provided by a sealed lead-acid battery. A Logitech QuickCam Pro 3000 is mounted on the robot on a wooden stick, about 140 cm above ground level. The camera angle can be changed with an RC servo, controlled by the SC12. A Celeron 366 MHz/64 MB laptop is mounted on top of the robot and is powered by its own batteries. The laptop is connected to the SC12 microcontroller by RS-232 at 19200 baud; the bumper sensors are connected via the parallel port.

Sensors

The main sensor is the USB camera mounted on the robot. The camera viewing angle is controlled by a conventional RC servo, driven by the SC12 microcontroller. The front scoop is equipped with two micro switches to detect collisions with non-moving items. The state of the micro switches can be read by the laptop via the parallel port. Light items (in the contest, the empty cans and cigarette boxes) will not trigger the collision sensor; these items are pushed by the robot to the target area. The wheels have mechanical rotation sensors that provide an approximate position of the robot. Each rotation sensor switches on and off 6 times per revolution of a main wheel, and its output is sent to the laptop via the parallel port.
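As a rough illustration of how such a coarse rotation sensor can yield a position estimate, the sketch below converts sensor pulses into travelled distance and applies a standard differential-drive dead-reckoning update. The wheel diameter and pulse count come from the text; the wheel track (`track_cm`) and the function names are assumptions, and the robot's actual position routine is not described here.

```python
import math

WHEEL_DIAMETER_CM = 15.0  # main wheel diameter, from the fact sheet
PULSES_PER_REV = 6        # sensor switches on and off 6 times per revolution


def cm_per_pulse():
    """Distance travelled by a main wheel between two sensor pulses."""
    return math.pi * WHEEL_DIAMETER_CM / PULSES_PER_REV


def update_pose(x, y, heading_rad, left_pulses, right_pulses, track_cm=30.0):
    """Dead-reckoning update for a differential-drive robot.

    track_cm (distance between the main wheels) is an assumed value.
    The pulse counts must carry the sign of the commanded direction,
    because a simple switch cannot tell forward from backward.
    """
    d_left = left_pulses * cm_per_pulse()
    d_right = right_pulses * cm_per_pulse()
    dist = (d_left + d_right) / 2.0
    d_theta = (d_right - d_left) / track_cm
    mid_heading = heading_rad + d_theta / 2.0
    return (x + dist * math.cos(mid_heading),
            y + dist * math.sin(mid_heading),
            heading_rad + d_theta)
```

With these numbers one pulse corresponds to roughly 7.9 cm of travel, which is consistent with the position being described as approximate.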

The Task

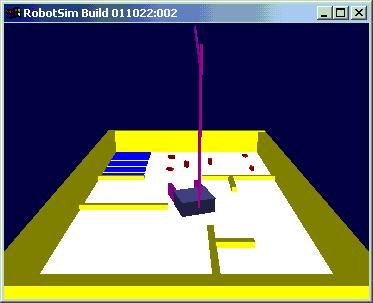

The robot is placed in a 3 by 2 meter arena. The arena has a uniform white/gray floor and is enclosed by yellow walls. Inside the arena, several more yellow walls are placed to resemble an office floor with approximately 5 rooms. In one of the rooms, several red items (empty beer cans and cigarette boxes) are placed. In the same room, a square target area is marked with a blue floor and blue walls. The task of the robot is to find its way to the room with the items, collect them, and drop them in the target area. The minimal opening between the walls is 40 cm, and the starting position of the robot is diagonally across from the target area.

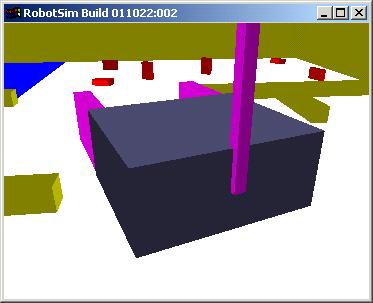

Robot Arena

Software

The robot uses custom software on the laptop and the microcontroller to process the images from the camera and steer the robot.

On the laptop, a Visual C++ GUI program runs. With the GUI the user can calibrate the camera angle, calibrate the camera settings so that colors are recognized correctly, and start, stop, or single-step the robot program. The program shows debug information on screen for each step in the robot program, including the current state and actions. The image feed from the camera is also shown, together with three additional images: the raw color-filtered image, the completely processed image including identified object positions, and an image giving information about the last program step.

Image Processing

The camera sends image frames to the image processing routine. For the task at hand the robot processes images in three layers: the items to be collected, the target area, and the walls. The processing takes the following steps:

1. Conversion of the RGB image to HSV color space.

2. Filtering for the appropriate color, resulting in a binary layer image (0 where the color is not present, 1 where it is).

3. Layer noise filtering.

4. Layer feature enhancement.
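Steps 1 and 2 can be sketched in a few lines of Python using the standard `colorsys` module. This is only an illustration of the idea, not the robot's Visual C++ code: the function name, the hue ranges for the three layers, and the saturation and value thresholds are all assumed values that would in practice come from the camera calibration.

```python
import colorsys


def color_layer(rgb_image, hue_lo, hue_hi, min_sat=0.4, min_val=0.2):
    """Return a binary layer: 1 where the pixel hue lies in the given range.

    rgb_image is a list of rows of (r, g, b) tuples with 0..255 components.
    Hues are in degrees; a range such as (340, 20) wraps around 0 degrees,
    which is needed for red.
    """
    layer = []
    for row in rgb_image:
        out_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            hue = h * 360.0
            if hue_lo <= hue_hi:
                in_range = hue_lo <= hue <= hue_hi
            else:  # range wraps around 0 degrees
                in_range = hue >= hue_lo or hue <= hue_hi
            # Low saturation or brightness means gray/white floor or shadow.
            keep = in_range and s >= min_sat and v >= min_val
            out_row.append(1 if keep else 0)
        layer.append(out_row)
    return layer


# Assumed hue ranges (degrees) for the three layers of the contest arena.
ITEM_HUES = (340, 20)     # red items
TARGET_HUES = (200, 260)  # blue target area
WALL_HUES = (45, 70)      # yellow walls
```

Working in HSV rather than RGB keeps the color test mostly independent of brightness, which is presumably why the conversion comes first.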

Noise filtering is performed by a single-pixel erode operation followed by a single-pixel dilate operation. The erode operation sets the output pixel to 0 if the corresponding input pixel or any of its 8 neighboring pixels is 0. The dilate operation sets the output pixel to 1 if the corresponding input pixel or any of its 8 neighboring pixels is 1. The two operations combined have the effect of eliminating small and thin objects, breaking objects at thin points, and generally smoothing the boundaries of larger objects without significantly changing their area.
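The erode-then-dilate sequence (a morphological opening) is easy to demonstrate on a small binary image. The following is a minimal pure-Python sketch; the function names are illustrative, and ignoring neighbors that fall outside the image is an assumption, since the text does not say how borders are handled.

```python
def _neighborhood(img, y, x):
    """Values of the pixel at (y, x) and its 8 neighbors (in-bounds only)."""
    h, w = len(img), len(img[0])
    return [img[j][i]
            for j in range(max(0, y - 1), min(h, y + 2))
            for i in range(max(0, x - 1), min(w, x + 2))]


def erode(img):
    """Output is 1 only if the pixel and all of its neighbors are 1."""
    return [[1 if all(_neighborhood(img, y, x)) else 0
             for x in range(len(img[0]))]
            for y in range(len(img))]


def dilate(img):
    """Output is 1 if the pixel or any of its neighbors is 1."""
    return [[1 if any(_neighborhood(img, y, x)) else 0
             for x in range(len(img[0]))]
            for y in range(len(img))]


def noise_filter(img):
    """Single-pixel erode followed by single-pixel dilate."""
    return dilate(erode(img))
```

Applied to a layer with a 3 x 3 block and one isolated pixel, `noise_filter` removes the isolated pixel and returns the block unchanged.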

Feature enhancement depends on the layer involved. For the item layer, which contains multiple relatively small objects, no further processing is done. For the target layer, which contains a single relatively large object, a 6-pixel dilate operation is followed by a 6-pixel erode. This has the effect of filling small and thin holes in the objects, connecting nearby objects, and generally smoothing the boundaries of objects without significantly changing their area. The wall layer is not noise filtered, but is smoothed and connected by a single-pixel dilate/erode operation.
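The dilate-then-erode sequence (a morphological closing) can be sketched the same way. Here an "n-pixel" operation is interpreted as n repeated 3 x 3 passes, which is an assumption about what the text means; `close_layer` and `_pass` are illustrative names, and neighbors outside the image are again ignored.

```python
def _pass(img, combine):
    """One 3x3 pass: combine=any dilates by one pixel, combine=all erodes."""
    h, w = len(img), len(img[0])
    return [[1 if combine(img[j][i]
                          for j in range(max(0, y - 1), min(h, y + 2))
                          for i in range(max(0, x - 1), min(w, x + 2))) else 0
             for x in range(w)]
            for y in range(h)]


def close_layer(img, n):
    """n dilate passes followed by n erode passes."""
    for _ in range(n):
        img = _pass(img, any)
    for _ in range(n):
        img = _pass(img, all)
    return img
```

Under this interpretation the target layer would use `close_layer(layer, 6)` and the wall layer `close_layer(layer, 1)`. Because out-of-image neighbors are ignored, objects within n pixels of the border can end up extended to the border.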

Object Position Estimates

The enhanced item and target layers are fed to an object position estimation routine. The routine calculates the position (in pixel coordinates) of each object in the image.
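The text does not say how the object positions are computed. One common approach, shown here purely as an assumed example, is to label 8-connected blobs of ones in the binary layer and take the centroid of each blob as its pixel position. The function name and the `min_pixels` parameter are hypothetical.

```python
from collections import deque


def find_objects(layer, min_pixels=1):
    """Return centroid and size of each 8-connected blob of ones."""
    h, w = len(layer), len(layer[0])
    seen = [[False] * w for _ in range(h)]
    objects = []
    for y0 in range(h):
        for x0 in range(w):
            if layer[y0][x0] != 1 or seen[y0][x0]:
                continue
            # Breadth-first flood fill collects all pixels of this blob.
            queue = deque([(y0, x0)])
            seen[y0][x0] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for j in range(max(0, y - 1), min(h, y + 2)):
                    for i in range(max(0, x - 1), min(w, x + 2)):
                        if layer[j][i] == 1 and not seen[j][i]:
                            seen[j][i] = True
                            queue.append((j, i))
            if len(pixels) >= min_pixels:
                cy = sum(p[0] for p in pixels) / len(pixels)
                cx = sum(p[1] for p in pixels) / len(pixels)
                objects.append({"x": cx, "y": cy, "size": len(pixels)})
    return objects
```

Blobs are reported in scan order (top to bottom, left to right); raising `min_pixels` discards any small blobs that survive the noise filter.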

The pixel coordinates are then transformed into grid coordinates in cm, relative to the center point of the robot's main wheel axis. The transformation depends on the camera position. The camera has three view-angle positions: Down, Near, and Far. After calibration of the camera positions, the program is able to calculate the position of objects relative to the robot. The calculated position is accurate to 5 cm or better for the camera Down and Near positions. The width of vision is about 160 cm. The field of vision in the forward direction depends on the camera position:

Camera Down: -30 to +70 cm (relative to the robot main wheel axis center point),

Camera Near: +15 to +110 cm,

Camera Far: +60 to +200 cm.
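The actual calibration-based transformation is not described. As a hedged illustration of the geometry involved, the sketch below projects a pixel onto a flat floor using an ideal pinhole camera. Only the 140 cm camera height comes from the text; the tilt angle, focal length in pixels, image size, and camera offset from the wheel axis are all assumed parameters, and the function name is hypothetical.

```python
import math


def pixel_to_ground(u, v, tilt_deg, cam_height_cm=140.0, cam_forward_cm=0.0,
                    focal_px=400.0, width=320, height=240):
    """Project pixel (u, v) onto the floor; return (forward_cm, left_cm).

    tilt_deg is the downward tilt of the optical axis from horizontal
    (90 = looking straight down). Returns None if the pixel's viewing ray
    does not hit the floor in front of the camera.
    """
    a = u - width / 2.0   # pixels right of the image center
    b = v - height / 2.0  # pixels below the image center
    tilt = math.radians(tilt_deg)
    # Downward component of the viewing ray (arbitrary scale).
    down = b * math.cos(tilt) + focal_px * math.sin(tilt)
    if down <= 0:
        return None  # ray is at or above the horizon
    t = cam_height_cm / down
    forward = cam_forward_cm + t * (focal_px * math.cos(tilt)
                                    - b * math.sin(tilt))
    left = -t * a
    return forward, left
```

In this model each of the three camera positions would simply be a different `tilt_deg`. The farther the ray travels before it meets the floor, the more one pixel of error costs in cm, which fits the stated accuracy being given only for the Down and Near positions.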

At present, no position calculation routine is available for line features (walls). For wall avoidance and wall following, a routine is used that counts the number of ones in a specific subarea of the wall layer.
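Counting ones in a subarea is straightforward to sketch. In the example below the region coordinates and the decision threshold are hypothetical; the text only states that the number of ones in a subarea of the wall layer is counted.

```python
def count_ones(layer, top, left, bottom, right):
    """Count the ones in rows top..bottom-1 and columns left..right-1."""
    return sum(sum(row[left:right]) for row in layer[top:bottom])


def wall_in_region(wall_layer, region, threshold):
    """True if the region holds at least `threshold` wall pixels.

    region is a (top, left, bottom, right) tuple in pixel coordinates.
    """
    return count_ones(wall_layer, *region) >= threshold
```

A wall-following routine could, for instance, test one region directly ahead of the robot and one to the side, and steer according to which of them report a wall.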

Movement

The robot moves by sending pulses of known length to the two motors. All eight movement modes are calibrated so that move and turn commands can be translated from cm and degrees to a pulse duration in milliseconds. The eight movement modes are: move forward/backward, turn left/right, forward-moving left/right turn, and backward-moving left/right turn. The move command with its duration is sent by RS-232 from the laptop to the microcontroller. The microcontroller takes care of the exact timing of the actual pulses sent to the motors.
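A per-mode calibration table might look like the sketch below. It assumes a simple linear relation between pulse duration and distance or angle, which the text does not confirm. The forward value of 40 ms per cm follows from the fact-sheet speed of 25 cm/s; every other number, and all names, are placeholders.

```python
# Assumed calibration: milliseconds of motor pulse per cm (moves)
# or per degree (turns). Only "forward" is derived from the stated
# speed of 25 cm/s; the other values are placeholders.
CALIBRATION_MS = {
    "forward": 40.0,         # ms per cm
    "backward": 40.0,        # ms per cm
    "turn_left": 12.0,       # ms per degree
    "turn_right": 12.0,      # ms per degree
    "forward_left": 20.0,    # ms per degree
    "forward_right": 20.0,   # ms per degree
    "backward_left": 20.0,   # ms per degree
    "backward_right": 20.0,  # ms per degree
}


def pulse_ms(mode, amount):
    """Translate a move (cm) or turn (degrees) into a pulse duration in ms."""
    return round(CALIBRATION_MS[mode] * amount)
```

For example, `pulse_ms("forward", 50)` gives 2000 ms for a 50 cm move. Keeping one entry per mode allows for left/right and forward/backward asymmetries in the two wiper motors.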

Robot Program

The actual robot control program is built up out of several subprograms. The subprograms are:

INIT: Initialization. Run either WALL_FOLLOW or TARGET_FIND.

WALL_FOLLOW: Follow walls until target area is identified. If target identified, store target position and run TARGET_RETURN.

TARGET_FIND: Move until a wall is hit or target is identified. If target identified, store target position and run TARGET_RETURN. If wall is hit, turn randomly.

TARGET_FINDNEAR: Assumes that the target is in the same room. Turn around trying to identify the target; if identified, run TARGET_RETURN.

ITEM_FIND: Assumes that an item has just been dropped. Look around and identify the closest item.

ITEM_GO: Go to the identified item. Update the target position with each move.

TARGET_RETURN: Return to the memorized target position with the item. If the target cannot be found, run TARGET_FINDNEAR.

ITEM_DROP: Drop the item in the target area, back up, turn 180 degrees, run ITEM_FIND.

Each subprogram consists of steps. A step can be a movement, a camera repositioning, or a jump to another subprogram. Between steps the image frame is updated. While a move or camera command is being executed, the robot program is suspended until the command has completed and a fresh image frame is available.
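The jumps between subprograms form a small state machine, which can be written down as a transition table. In the sketch below the event names are invented for illustration, and the transitions marked "assumed" are inferred from the subprogram descriptions rather than stated outright. INIT is left out because its choice between WALL_FOLLOW and TARGET_FIND is not specified.

```python
# (current subprogram, event) -> next subprogram
TRANSITIONS = {
    ("WALL_FOLLOW", "target_identified"): "TARGET_RETURN",
    ("TARGET_FIND", "target_identified"): "TARGET_RETURN",
    ("TARGET_FIND", "wall_hit"): "TARGET_FIND",  # turn randomly, keep going
    ("TARGET_FINDNEAR", "target_identified"): "TARGET_RETURN",
    ("ITEM_FIND", "item_identified"): "ITEM_GO",       # assumed
    ("ITEM_GO", "item_reached"): "TARGET_RETURN",      # assumed
    ("TARGET_RETURN", "target_reached"): "ITEM_DROP",  # assumed
    ("TARGET_RETURN", "target_lost"): "TARGET_FINDNEAR",
    ("ITEM_DROP", "done"): "ITEM_FIND",
}


def next_program(current, event):
    """Return the subprogram to run after `event`; stay put if no rule fits."""
    return TRANSITIONS.get((current, event), current)


def run(start, events):
    """Feed events through the table and return the visited subprograms."""
    trace = [start]
    for event in events:
        trace.append(next_program(trace[-1], event))
    return trace
```

Calling `run("WALL_FOLLOW", ["target_identified", "target_reached", "done", "item_identified"])` walks through TARGET_RETURN, ITEM_DROP, and ITEM_FIND to ITEM_GO. This matches the description in that the robot first locates the target area and only then starts looking for items.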